The Dark Underbelly of AI

Opinion, 21 April 2024

by L.A. Davenport

Around this time last year, I wrote a column bemoaning the typical and rather lamentable human trait of investing novel technologies with far more importance and significance than they, in reality, merit.

I said at the time that ChatGPT, which (if by some miracle you haven’t heard of it) is an almost ubiquitous artificial intelligence (AI) tool, is currently little more than a parlour game, but nevertheless has the power to serve as a potential add-on to minor work tasks or as a starting point for research, if used correctly and in the spirit in which it was intended.

I even had a very interesting debate with Damon Freeman, founder and creative director of the design agency Damonza, about the use of AI-derived images in book covers (not in this case via ChatGPT, but with similar tools for creating visuals).

However, and as we so often do, individuals and businesses have taken this unfinished, rough and frankly unreliable product and tried to apply it in every which way to every which task they can think of, with no apparent thought to the accuracy of the results nor the consequences of their actions.

Worse than that has been the relentless drive, motivated purely by cost-saving and profit-seeking, to employ AI to perform many of the perfunctory and apparently useless aspects of professional jobs and roles (although I have argued that mundane tasks can be extremely beneficial both for skill-building and as a safety valve for the mind).

This blatant over-reach of AI is intended as an excuse eventually to lay off huge swathes of white-collar workers, a process that has already started in some areas. (The legal profession is often cited as a classic example, although the limits of AI in filing legal briefs have been swiftly and brutally exposed.)

While this is all rather concerning and makes one fret for one’s own career—mine as a journalist included (see next week’s column)—I realised recently, and frankly should have expected, that there is a dark underbelly to all of this swift and misplaced reliance on AI and all its multiple manifestations.

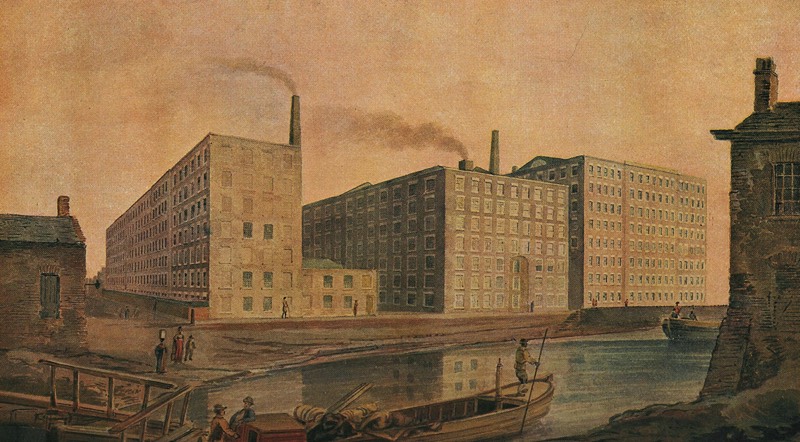

While beguiling a few hours in a Budapest café, I caught up on a recent issue of Prospect magazine. In it, Ethan Zuckerman offers up a parallel between the industry behind the shiny modern ‘magic’ of AI and the cotton mills of 19th-century England, arguing that we are relying on workers toiling away out of sight.

He writes that advocates for AI tools “promise they will transform, well, pretty much everything from how we search for information, book a trip or shop for clothes, to how we organise our workplaces and wider society.”

But, much as the sheer bulk of the Victorian power looms obscured the sight of children reaching into the machines and “mending broken threads, the impressive achievements of these massive [AI] systems tend to blind us to the human labour that makes them possible.”

Zuckerman explains that image-generation programs, for example, rely “on massive sets of labelled data…all carefully labelled by humans.”

He says that Josh Dzieza, writing in Verge, “interviewed some of the thousands—possibly millions—of workers who label these images from their computers in countries like Kenya and Nepal for as little as $1.20 an hour.”

Zuckerman continues: “While these annotators are on the frontlines of feeding the machine, other human contributors may not be aware they are part of the AI supply chain.”

He cites work by the Washington Post and the Allen Institute for AI, who analysed Common Crawl, “a giant set of data that includes millions of publicly accessible websites,” which is the main source material for Google's T5 and Facebook's LLaMA. (He points out that ChatGPT won’t say what data they used to train their model.)

While Common Crawl includes many respected sites and newspapers, Zuckerman says there is “weird stuff in there too,” such as his personal blog, much of Reddit, and a “notorious repository of pirated books,” including those by JK Rowling and Hannah Arendt.

It is frankly chilling to think of how we can blind ourselves to the realities of so much of what we take for granted, and assume that blatant abuses of human rights and copyright law are a thing of the past, or simply not possible in our modern, brightly lit world.

Obviously I had wondered how on earth all of those online pictures used as the basis of AI-generated images could have been analysed and tagged before being fed into the system, when it is obvious that so much of the internet is not particularly well characterised, and was certainly not set up so as to be mined by an algorithm.

I had, naïvely it turned out, assumed that it happened automatically. But of course not.

Zuckerman’s column also reinforces the idea that we should, at the very least, be wary of the results that we get from AI tools, and certainly not use them for serious purposes, as his examples underline how unscrupulous and cavalier the developers were in building the tools in the first place.

After all, if they are problematic in that way, then why not in every aspect?

As I said in my previous column, the problem is, in one sense, not with ChatGPT, as it is designed merely as a shop window for whatever AI business the developers want to make the ‘real’ money from, but rather with us taking it so seriously and investing it with an importance and stature that it does not merit.

Next week, I shall continue with this topic and look at how the much-discussed threat to swathes of professional jobs is playing out in real life, using my own example of journalism and that of the coder, a job once seen as so secure that it could serve as a potential saviour for society, or at least a potential cure for homelessness.

Back to writing. While I eagerly await the blog tour for Escape, The Hunter Cut, starting on May 6, which is also the publication date of the book, things have not been quiet here.

This week I published two more parts (Part II and Part III) to my story The White Room, which originally started out as a script for a short film but was rewritten for my third collection, No Way Home.

The interval between the first part being put up on here and the arrival of these next two instalments is rather embarrassingly long, but I can assure you that you will not have to wait for the two final episodes.

Work also continues apace on finalising the layout of my next collection, Pushing the Wave 2017–2022, which picks the best pieces from the first five years of this site and combines them with photographs and images in a way that is more like a cross between an art book and an annual than a traditional book.

I hope you will enjoy them all.

© L.A. Davenport 2017-2024.
